About

I am a postdoctoral research associate in the Division of Applied Mathematics at Brown University working with Paul Dupuis. My research interests lie broadly in the mathematics of machine learning, in particular analyzing and developing novel generative modeling algorithms from the perspectives of mathematical control theory and mean-field games. Conversely, I also use tools from generative machine learning to develop theoretically well-grounded methods for rare event simulation in dynamical systems and for sampling in Bayesian computation. I was previously a postdoctoral research associate in the Department of Mathematics and Statistics at UMass Amherst working with Markos Katsoulakis and Luc Rey-Bellet.

I earned my PhD in Computational Science and Engineering from MIT in 2022. My advisor was Youssef Marzouk, who heads the Uncertainty Quantification group. I earned my Master’s degree in Aeronautics & Astronautics at MIT in 2017, and my Bachelor’s degrees in Engineering Physics and Applied Mathematics at UC Berkeley in 2015. I was an MIT School of Engineering 2019-2020 Mathworks Fellow. I spent the summer of 2017 as a research intern at United Technologies Research Center (now Raytheon), where I worked with Tuhin Sahai on novel queuing systems.

Recent News

October

Our paper Transport map unadjusted Langevin algorithms has been accepted for publication in Foundations of Data Science!

New preprint on structure-preserving generative modeling! In Equivariant score-based generative models provably learn distributions with symmetries efficiently, we prove generalization bounds for equivariant score-based generative modeling and show that the common practice of data augmentation is provably inferior to using an explicitly equivariant score function when learning distributions invariant to a group. This work builds upon the PDE theory approach to the analysis of generative models developed in our paper Score-based generative models are provably robust: an uncertainty quantification perspective. This is joint work with Ziyu Chen and Markos Katsoulakis.

Happy to announce that our paper Score-based generative models are provably robust: an uncertainty quantification perspective has been accepted to NeurIPS 2024 through the Main Track!

Upcoming Events

July

I will be attending and presenting in the IPAM Workshop on Sampling, Inference, and Data-Driven Physical Modeling in Scientific Machine Learning.

May

I will be attending and presenting in the SIAM Conference on Applications of Dynamical Systems in the minisymposium on Collective Dynamics in Multi-Agent Systems: Advances in Learning and Optimization. I will be presenting work on mean-field games and generative modeling.

March

I am attending and presenting in the SIAM Conference on Computational Science and Engineering in the minisymposium on Addressing intractability in optimal control. I am presenting recent ongoing work on ergodic control through interacting particle systems and generative modeling tools.

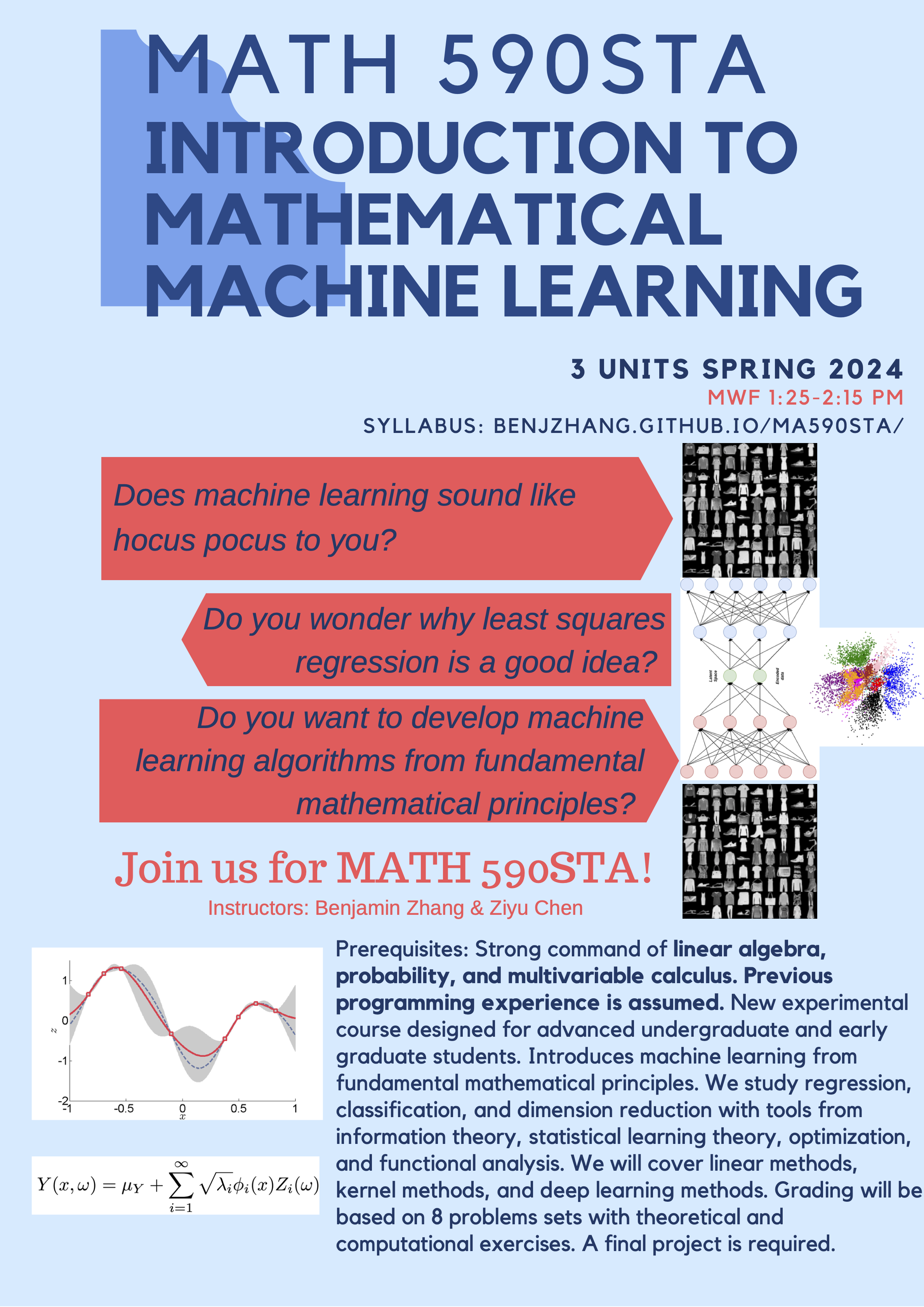

APMA 1930Z: Introduction to Mathematical Machine Learning (Fall 2024)

I will be co-teaching APMA 1930Z at Brown University in Fall 2024. APMA 1930Z is the second iteration of Math 590STA, first offered at UMass Amherst in Spring 2024. We cover classical solutions to machine learning tasks such as regression, classification, and dimension reduction from fundamental mathematical concepts.
