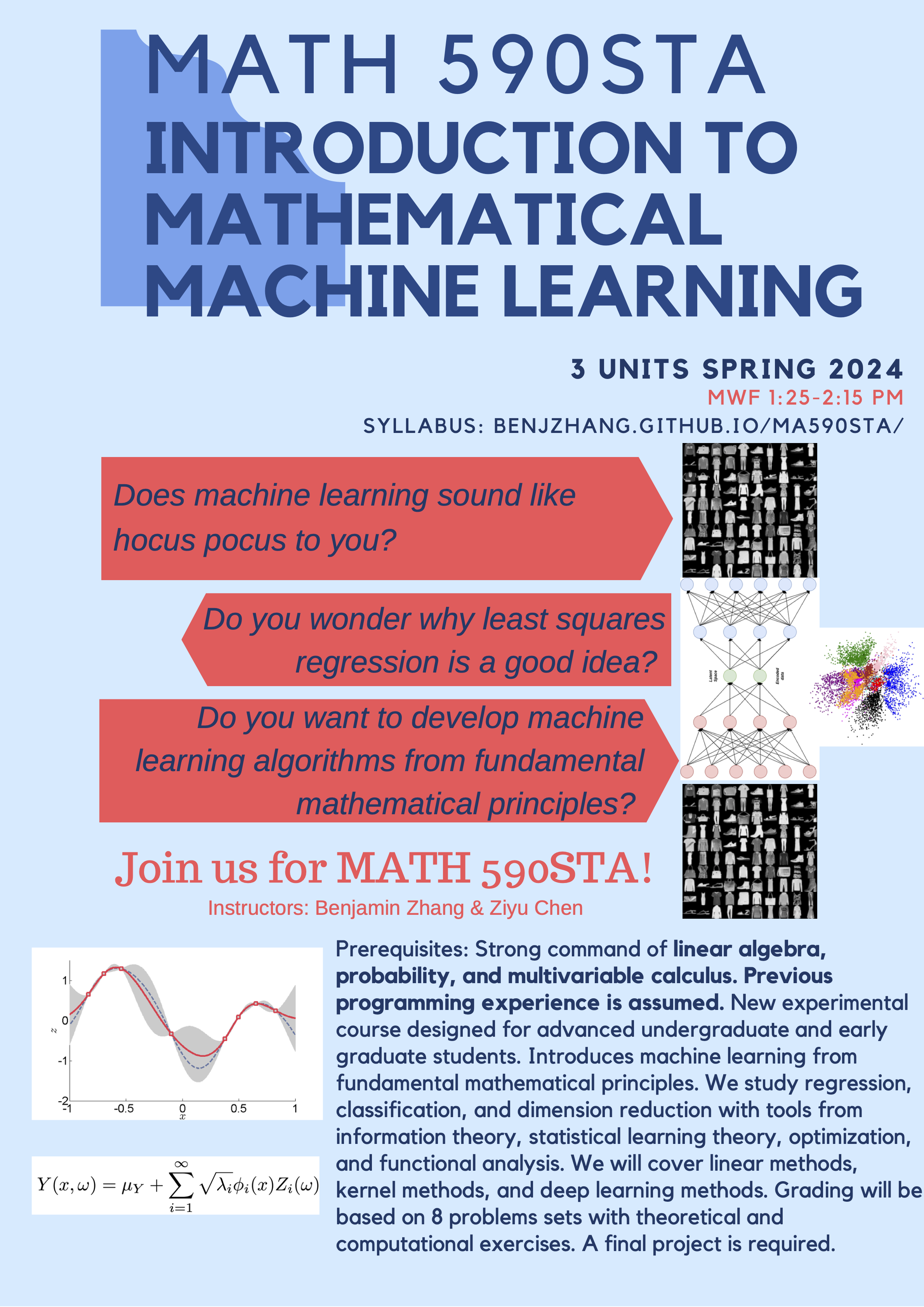

MATH 590STA: Introduction to Mathematical Machine Learning

A final syllabus and course schedule have been posted to Canvas.

Spring 2024

MWF 1:25-2:15PM in LGRT 121

Instructors and contact

Benjamin Zhang, LGRT 1632, bjzhang@umass.edu

Ziyu Chen, LGRT 1630, ziyuchen@umass.edu

Course description

This course will provide an introduction to machine learning from a mathematical perspective. Its primary objective is to cultivate mathematical curiosity in students and to equip them with the skills to ask mathematical questions when studying machine learning algorithms. Classical supervised learning methods will be presented and studied using tools from information theory, statistical learning theory, optimization, and basic functional analysis. The course will cover three categories of machine learning approaches (linear methods, kernel-based methods, and deep learning methods), each applied to regression, classification, and dimension reduction. Coding exercises will be an essential part of the course, used to study the strengths and weaknesses of each method empirically.

Prerequisites

This class is intended for advanced undergraduates and first-year Master's students. We expect a strong command of probability, multivariable calculus, and linear algebra at the level of STAT 315/515, MATH 233, and MATH 545, or permission of the instructor. Recommended: familiarity with numerical methods at the level of MATH 551. Basic programming experience is assumed. You may program in any language, but most instructional scripts will be provided in Python.

Textbook

There is no required textbook. We will assign selected readings from:

- Probabilistic Machine Learning: An Introduction by Kevin P. Murphy

- Gaussian Processes for Machine Learning by Carl Edward Rasmussen and Christopher K. I. Williams

- The Elements of Statistical Learning by Trevor Hastie, Robert Tibshirani, and Jerome Friedman

Supplementary readings will be provided for topics not covered in the textbooks.

Homework & Grading (subject to change!)

Your grade will be determined by 8 problem sets, a quiz, minute papers, and a final project.

- Homework: 56%

- Quiz (February 28): 9%

- Minute papers: 5%

- Project proposal (Due April 5): 10%

- Project report (Due May 15): 20%

Each homework assignment is worth 8% of the final grade, and total homework credit is capped at 56% across all 8 assignments (the equivalent of seven full assignments). Each problem set will consist of derivations, proof-based questions, and numerical experiments with machine learning algorithms.
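To make the homework cap concrete, here is a minimal Python sketch of how the weights above might combine. It assumes each component is scored as a fraction between 0 and 1 and that the cap simply limits the summed homework credit to 56 points; the function `course_grade` and its argument names are hypothetical, not a tool provided by the course.

```python
def course_grade(hw_scores, quiz, minute_papers, proposal, report):
    """Return a final percentage from component scores in [0, 1].

    Hypothetical helper: assumes 8 points per homework, with total
    homework credit capped at 56 points, plus the listed weights.
    """
    # Each homework contributes up to 8 points; the sum is capped at 56.
    hw_total = min(sum(8.0 * s for s in hw_scores), 56.0)
    # Remaining weights: quiz 9, minute papers 5, proposal 10, report 20.
    return hw_total + 9.0 * quiz + 5.0 * minute_papers + 10.0 * proposal + 20.0 * report


# Seven perfect homework scores and one zero already reach the cap.
print(course_grade([1.0] * 7 + [0.0], 1.0, 1.0, 1.0, 1.0))
```

Under this reading, eight perfect homework scores would total 64 points before the cap, so a single missed assignment would not lower an otherwise perfect grade.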

MATH 590STA is an experimental course, so student feedback will be invaluable for future iterations. The instructors therefore ask students to complete a short reflection (minute paper) after each class on what they learned and how effectively the lectures and homework contributed to their learning. Attending lectures is crucial for receiving credit for minute papers.

An individual final project is required to pass the class. A project proposal will be due on April 5. Poster sessions showcasing each student's work will take place during the last week of class (May 6, 8, and 10). The final project report (7-10 pages) will be due on May 15. A list of suggested projects, along with guidelines for the proposal, final report, and poster session, will be released early in the semester. Students are also encouraged to propose their own project, subject to approval from the instructors.

Subject outline

Part 0: Introduction to machine learning and linear algebra review

- Introduction and motivation; Tour of machine learning topics and methods. Supervised versus unsupervised learning, regression, classification, dimension reduction.

- Review of linear algebra; vector and matrix norms, singular value decomposition, Moore-Penrose pseudoinverse, Eckart-Young theorem, low-rank approximations, condition numbers

Part 1: Linear methods and foundations

- Linear least squares regression; normal equations, SVD, conditioning

- Maximum likelihood estimation and information theory; entropy, Kullback-Leibler divergence, minimum-variance unbiased estimators, Cramér-Rao bound, best linear unbiased estimators, Gauss–Markov theorem

- Model selection and generalization; bias–variance tradeoff, cross-validation

- Bayesian inference; Maximum a posteriori estimators, conjugate priors

- Regularization; underdetermined least squares, rank-deficient least squares, Tikhonov regularization, ridge and LASSO regression, compressed sensing, sparsity, Gaussian and Laplace priors

- Linear dimension reduction; principal components analysis, principal components regression, Johnson-Lindenstrauss lemma

- Optimization methods; gradient descent, stochastic gradient descent, Newton's method, constrained optimization, KKT conditions

- Linear discriminant analysis, logistic regression, perceptrons, separating hyperplanes

Part 2: Kernel methods and learning theory

- Support vector machines; linear SVMs, kernel SVMs

- Learning theory; model complexity, PAC learning, Vapnik–Chervonenkis dimension, Rademacher complexity, generalization

- Reproducing kernel Hilbert spaces; Hilbert spaces, Mercer’s theorem

- Nonparametric regression; Derivative regularization, Sobolev spaces, representer theorem

- Gaussian processes; Karhunen-Loève expansion, Nyström methods, Mercer kernels, Gaussian process regression, model selection, marginal likelihood

- Kernels for large datasets; Random Fourier features

- Nonlinear dimension reduction; kernel PCA

Part 3: Deep learning methods

- Introduction to neural networks; multilayer perceptrons, neural network architectures, activation functions, backpropagation

- Regularization; double descent phenomena, model complexity, model selection

- Nonlinear dimension reduction; autoencoders

- Theory of deep learning; universal approximation theorems, connections to kernel methods